Introduction

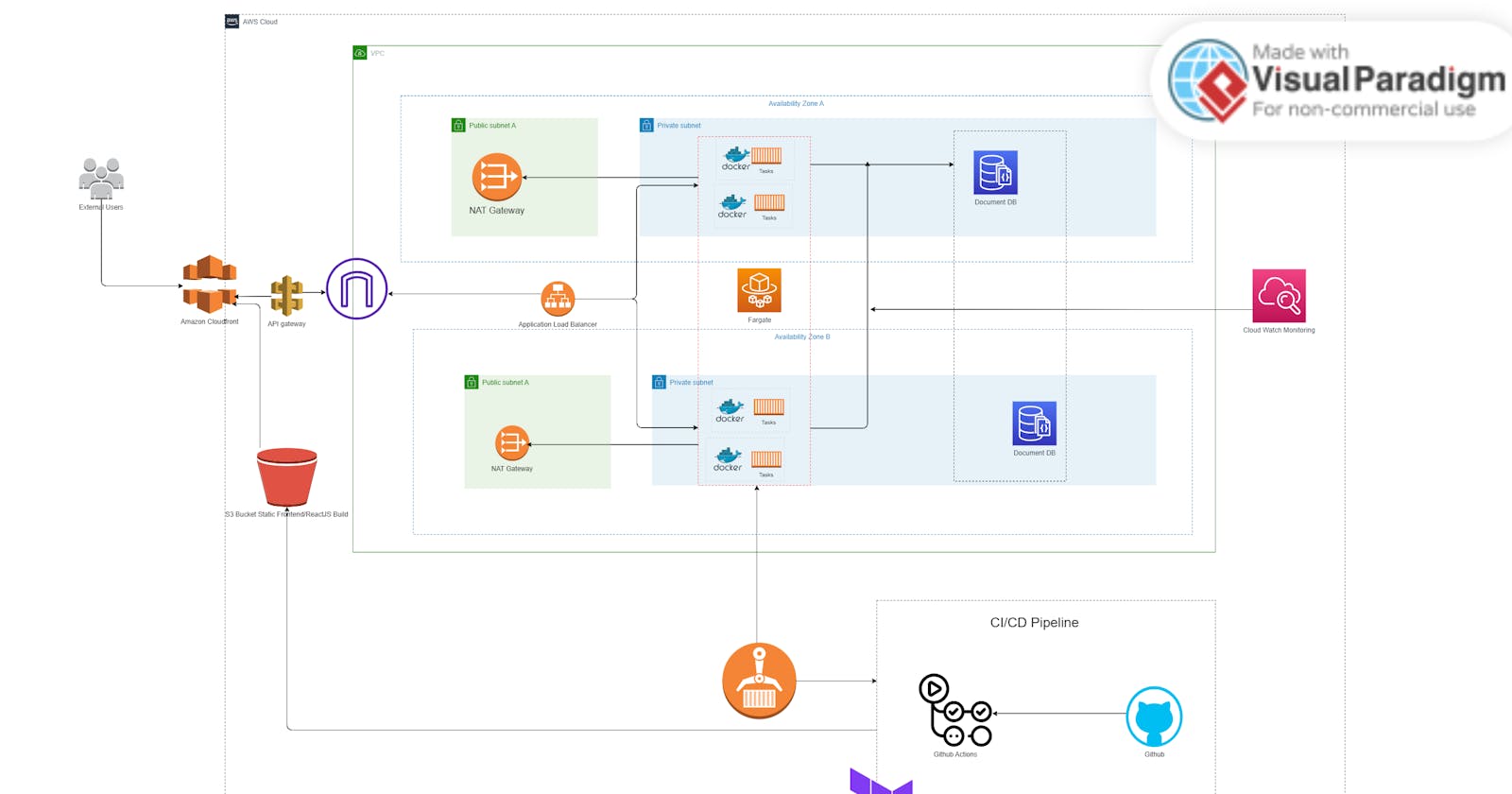

Hello, and welcome back to my blog. In this article, I present the AWS architecture I have finally chosen for deploying my codebase: a MERN (MongoDB, ExpressJS, ReactJS, NodeJS) stack application that must be deployed on AWS under a specific set of requirements.

Essential Criteria for Application Deployment

The deployment has to meet certain requirements driven by the business, the codebase's language, and cost. Based on the business requirements, the deployment on AWS should:

Support a microservice architecture

Be secure and scalable

Include a DevOps pipeline

Be manageable by a team with limited infrastructure capability

Provide monitoring

Be cost-efficient

To show how the architecture I designed meets these requirements, I'll break each one down alongside the AWS features and services that address it.

Support Microservice Architecture

What exactly is a microservice architecture? In simple terms, it is an application architecture in which the application is built as a collection of services, with the aim of keeping those services independent of one another. In this architecture, the frontend (ReactJS) is served from an S3 bucket, while the backend (ExpressJS and NodeJS) runs separately in Docker containers on Amazon ECS. The MongoDB database (which the backend accesses through Mongoose) is deployed on Amazon DocumentDB, AWS's managed, MongoDB-compatible document database. Deploying the tiers this way lets each part of the application exist and run without depending on the others' compute. For example, if the database fails, the frontend and the API containers keep running (although requests that need the database will fail) while the database recovers or gets fixed.
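The failure-isolation point can be illustrated with a minimal Python sketch. The real backend is ExpressJS/NodeJS, and the names `fetch_products`, `DatabaseUnavailable` and the handler functions are hypothetical. The idea is that a health check which does not touch the database keeps the API task from being reported unhealthy just because the database is down, while database-backed routes answer with HTTP 503 instead of crashing the process.

```python
class DatabaseUnavailable(Exception):
    """Raised by the (hypothetical) data layer when DocumentDB cannot be reached."""


def fetch_products(db_is_up: bool) -> list[dict]:
    # Stand-in for a real DocumentDB query; db_is_up simulates the database state.
    if not db_is_up:
        raise DatabaseUnavailable("cannot reach the database")
    return [{"id": 1, "name": "example product"}]


def handle_health() -> tuple[int, dict]:
    # Health check that does not touch the database, so the API task is not
    # reported unhealthy (and replaced) just because the database is down.
    return 200, {"status": "ok"}


def handle_list_products(db_is_up: bool) -> tuple[int, dict]:
    try:
        return 200, {"products": fetch_products(db_is_up)}
    except DatabaseUnavailable:
        # Degrade gracefully: the process keeps serving and reports 503
        # for database-backed routes until the database recovers.
        return 503, {"error": "database temporarily unavailable"}


if __name__ == "__main__":
    print(handle_health())                       # (200, {'status': 'ok'})
    print(handle_list_products(db_is_up=False))  # (503, {'error': 'database temporarily unavailable'})
```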

Secure and Scalable

Let's begin with security. First of all, the application's backend and database are not directly exposed to the internet, so users cannot reach those resources directly; any outbound internet access they need goes through a NAT gateway. The architecture achieves this with AWS networking services that isolate resources while still providing the connectivity they need: a VPC, subnets, route tables, an Internet Gateway and a NAT gateway.
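To make the network layout concrete, here is a small sketch using Python's standard `ipaddress` module that carves a VPC range into public and private subnets across two Availability Zones. The `10.0.0.0/16` range, the `/24` subnet size, the zone names and the placement of each tier are assumptions for illustration, not the exact values of this architecture; the comments note which route each subnet type would use.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, azs: list[str], new_prefix: int = 24) -> dict[str, str]:
    """Carve one public and one private subnet per Availability Zone out of the VPC CIDR."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = iter(vpc.subnets(new_prefix=new_prefix))  # equally sized, in address order
    plan: dict[str, str] = {}
    for az in azs:
        # Public subnet: route table sends 0.0.0.0/0 to the Internet Gateway.
        # Assumed to host the load balancer and the NAT gateway.
        plan[f"public-{az}"] = str(next(blocks))
    for az in azs:
        # Private subnet: route table sends 0.0.0.0/0 to the NAT gateway (outbound only).
        # Assumed to host the ECS tasks and DocumentDB.
        plan[f"private-{az}"] = str(next(blocks))
    return plan


if __name__ == "__main__":
    for name, cidr in plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"]).items():
        print(name, cidr)
```

Keeping the private subnets free of a direct Internet Gateway route is what prevents users from reaching the backend and database directly.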

For scalability, the architecture uses AWS auto scaling to scale resources automatically based on the current load. This applies to the backend/API tier, where the number of Docker containers (ECS tasks) is adjusted automatically. Auto scaling is the game changer here: instead of guessing capacity up front, the service adds tasks when load rises and removes them when it falls.
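Target tracking is one common way to configure this for ECS services. Its core idea can be sketched in Python as a simplified, proportional model, assuming a single average-CPU metric and made-up minimum and maximum task limits. The real Application Auto Scaling behaviour also applies cooldown periods and alarm evaluation rules, so treat this only as intuition.

```python
import math


def desired_task_count(current_tasks: int, current_metric: float, target_metric: float,
                       min_tasks: int = 1, max_tasks: int = 10) -> int:
    """Simplified target tracking: scale the task count in proportion to how far the
    observed metric (e.g. average CPU %) is from the target, then clamp to [min, max]."""
    if current_tasks <= 0 or target_metric <= 0:
        raise ValueError("current_tasks and target_metric must be positive")
    desired = math.ceil(current_tasks * current_metric / target_metric)
    return max(min_tasks, min(max_tasks, desired))


if __name__ == "__main__":
    print(desired_task_count(2, 90.0, 50.0))  # 4: load is high, scale out
    print(desired_task_count(4, 20.0, 50.0))  # 2: load is low, scale in
```

For example, 2 tasks averaging 90% CPU against a 50% target give `ceil(2 * 90 / 50) = 4` tasks, while 4 tasks at 20% shrink to 2. The min/max clamp mirrors the minimum and maximum capacity you set on the scalable target.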

DevOps Pipeline

My favorite part! The architecture is designed to adopt and encourage DevOps practices throughout the automated deployment process. The infrastructure will be provisioned using Infrastructure as Code (IaC), with changes to it tracked in Git. The CI/CD pipeline for the application will be built with GitHub Actions to automatically provision infrastructure and deploy new versions of the application.

Every tier of the application (frontend, backend and database) will be managed and deployed automatically through the CI/CD pipeline, which releases new changes, runs tests and deploys newer versions.
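One way the pipeline could decide which tiers to release is by looking at which paths changed in a commit. The sketch below assumes a hypothetical monorepo layout with `frontend/`, `backend/` and `infra/` directories; it is plain Python for illustration, not a GitHub Actions feature.

```python
# Hypothetical monorepo layout; adjust the prefixes to the real repository.
TIER_PREFIXES = {
    "infra/": "infrastructure",  # IaC changes: VPC, ECS cluster, DocumentDB, ...
    "backend/": "backend",       # build Docker image, push it, update the ECS service
    "frontend/": "frontend",     # build the ReactJS app, upload it to the S3 bucket
}

# Deploy order: infrastructure first so later tiers run against current resources.
DEPLOY_ORDER = ["infrastructure", "backend", "frontend"]


def tiers_to_deploy(changed_files: list[str]) -> list[str]:
    """Return the tiers touched by a commit, in deployment order."""
    touched = {
        tier
        for path in changed_files
        for prefix, tier in TIER_PREFIXES.items()
        if path.startswith(prefix)
    }
    return [tier for tier in DEPLOY_ORDER if tier in touched]


if __name__ == "__main__":
    print(tiers_to_deploy(["frontend/src/App.js", "backend/server.js", "README.md"]))
    # ['backend', 'frontend']
```

In GitHub Actions itself, a similar effect can be achieved by giving each tier its own workflow with a `paths` filter on the `push` trigger.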

Limited Infrastructure Capability

The previous architecture I planned to deploy this codebase on was the same as this one, except that the database ran in Docker containers on ECS, with a file system mounted as a volume to persist data. However, given the organization's limited infrastructure capability, I chose Amazon DocumentDB to handle the workload those containers would have managed. This cut the cost of running another set of containers on serverless compute.

This solution lets the organization pay only for the compute capacity it specifies and uses. With AWS Fargate as the compute engine, the team specifies the vCPU and memory each task needs without having to manage the underlying machines.
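Fargate only accepts certain vCPU/memory combinations per task, and billing is based on the vCPU and memory a task requests. The sketch below encodes the Linux combinations for 0.25 to 4 vCPU as I understand them from the Fargate documentation (worth re-checking, since AWS adds sizes over time) and estimates an hourly task cost from per-vCPU-hour and per-GB-hour rates that you pass in; no real prices are assumed.

```python
# CPU is in Fargate CPU units (1024 units = 1 vCPU); memory is in MiB.
# Linux task sizes for 0.25 to 4 vCPU; larger sizes (8 and 16 vCPU) exist but are omitted.
FARGATE_TASK_SIZES: dict[int, list[int]] = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def is_valid_fargate_size(cpu_units: int, memory_mib: int) -> bool:
    """Return True if the CPU/memory pair is an allowed Fargate task size."""
    return memory_mib in FARGATE_TASK_SIZES.get(cpu_units, [])


def hourly_task_cost(cpu_units: int, memory_mib: int,
                     vcpu_hour_rate: float, gb_hour_rate: float) -> float:
    """Estimate one task-hour: you pay for the vCPU and memory the task requests.
    The rates are region-specific inputs, not values assumed here."""
    if not is_valid_fargate_size(cpu_units, memory_mib):
        raise ValueError(f"invalid Fargate size: {cpu_units} CPU units / {memory_mib} MiB")
    vcpus = cpu_units / 1024
    gigabytes = memory_mib / 1024
    return vcpus * vcpu_hour_rate + gigabytes * gb_hour_rate


if __name__ == "__main__":
    print(is_valid_fargate_size(512, 1024))  # True: 0.5 vCPU with 1 GB
    print(is_valid_fargate_size(256, 4096))  # False: 0.25 vCPU allows at most 2 GB
```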

Monitoring

The architecture uses Amazon CloudWatch, AWS's monitoring service. CloudWatch will collect metrics and logs from both the backend/API service and the database, which will help with debugging and give better visibility into the state of the application.
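On ECS, a common way to get container logs into CloudWatch is the `awslogs` log driver, which forwards whatever the container writes to stdout/stderr to CloudWatch Logs. Writing one JSON object per line makes those logs easy to filter, because CloudWatch Logs Insights discovers fields in JSON log events. The sketch below is in Python for consistency with the other examples (the real backend would do the same from NodeJS), and the field names are assumptions.

```python
import json
import sys
from datetime import datetime, timezone


def log_event(level: str, message: str, **fields) -> str:
    """Write one structured log line to stdout, where the awslogs driver picks it up."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "level": level,
        "message": message,
        **fields,  # extra context, e.g. route or latency
    }
    line = json.dumps(record)
    print(line, file=sys.stdout, flush=True)
    return line


if __name__ == "__main__":
    log_event("ERROR", "database request timed out", route="/api/products", latency_ms=5000)

# In CloudWatch Logs Insights, such lines could then be queried with, e.g.:
#   fields @timestamp, message, route
#   | filter level = "ERROR"
#   | sort @timestamp desc
```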

Cost Efficient

Cost is a core consideration when deploying applications on the cloud. We do not want to end up with a large bill that the organization cannot cover, so we use AWS billing services to estimate costs and to set billing alarms and quotas. Let's break down how much this infrastructure would cost to run on AWS. For this demo, we assume the organization has $1000 per year to spend on infrastructure, and to show the cost breakdown, we'll use an AWS cost-estimation service to calculate the cost of running this infrastructure.

From the cost breakdown of each resource, the database is where most of the money goes, because of its storage and memory capacity. Next up the price triangle is the load balancer, which distributes traffic across the backend containers (ECS tasks) using the tasks' IP addresses.

On the frontend, the cost of storing the static content in the S3 bucket is small compared with the other services, and the CloudFront distribution is free for the first 1 TB of data transfer out each month, which is enough for our needs.
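The $1000-per-year budget translates directly into monthly figures for billing alarms. The sketch below does that arithmetic in Python; the alarm fractions (50%, 80% and 100% of the monthly budget) are an assumption, and the CloudFront helper simply applies the 1 TB monthly free allowance mentioned above, ignoring request charges and regional pricing.

```python
ANNUAL_BUDGET_USD = 1000.0           # from the scenario above
CLOUDFRONT_FREE_TB_PER_MONTH = 1.0   # monthly free data transfer out


def monthly_budget(annual_budget: float) -> float:
    return annual_budget / 12


def alarm_thresholds(annual_budget: float, fractions=(0.5, 0.8, 1.0)) -> list[float]:
    """Monthly spend levels (USD) at which a billing alarm could fire."""
    monthly = monthly_budget(annual_budget)
    return [round(monthly * f, 2) for f in fractions]


def within_budget(monthly_costs: dict[str, float], annual_budget: float) -> bool:
    """True if the summed monthly estimates, over 12 months, fit in the annual budget."""
    return sum(monthly_costs.values()) * 12 <= annual_budget


def cloudfront_billable_tb(data_out_tb: float) -> float:
    """Data transfer out that exceeds the monthly free allowance."""
    return max(0.0, data_out_tb - CLOUDFRONT_FREE_TB_PER_MONTH)


if __name__ == "__main__":
    print(alarm_thresholds(ANNUAL_BUDGET_USD))  # [41.67, 66.67, 83.33]
```

`within_budget` takes whatever per-service monthly estimates the cost breakdown produces, so the same check can be rerun whenever the estimate changes.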

Conclusion

This deployment was designed with the AWS Well-Architected Framework in mind, focusing on availability, elasticity, reliability, performance, sustainability and cost optimization. I aim to use this deployment to explore cloud-native deployments and how secrets can be handled efficiently in the cloud.

This is my first architecture design, so I would appreciate any corrections or additions; feel free to reach out to me on Twitter :) I hope to improve my cloud and DevOps skills through this self-paced exercise. Thank you for reading.